We have come a long way in developing and training complex machine learning and deep learning models. Though we usually deploy them on cloud platforms to perform useful tasks in real time, sometimes you need to deploy them on edge devices for reasons such as better response time, lower cost, and data privacy. That’s where Tiny Machine Learning (TinyML) comes into play.

In this article, we will discuss TinyML and its role today: what it is, why it’s important, what it can be used for, and the challenges it still faces.

What is Tiny Machine Learning (TinyML)?

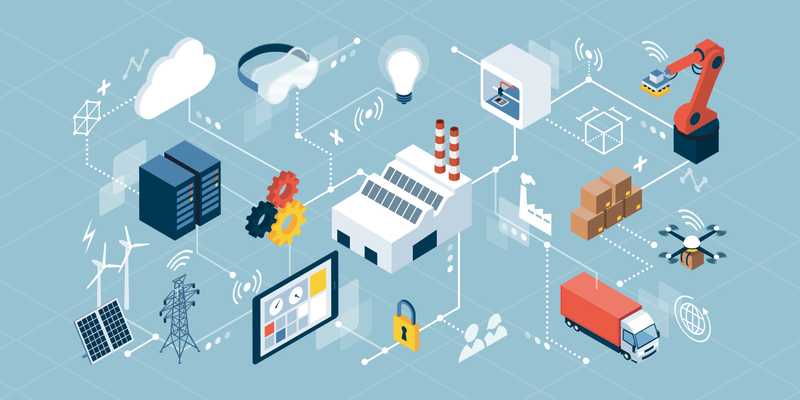

TinyML is where the embedded Internet of Things (IoT) and machine learning (ML) intersect. In other words, TinyML is the practice of running machine learning and deep learning models on embedded, low-power devices such as microcontrollers.

Why is TinyML important?

TinyML plays a significant role in bridging IoT devices and the machine learning community. The technology can change how IoT devices interact with and process data. Traditionally, IoT devices send data to the cloud, where hosted machine learning models make inferences and provide insights. With TinyML, machine learning models can be deployed on the IoT devices themselves, producing inferences and insights without sending data to the cloud.

This is crucial for many reasons, especially these four:

- Data privacy. When you transmit data from edge devices to the cloud, it becomes vulnerable to interception and can even be used for malicious purposes. Keeping your data on the edge devices reduces this risk and strengthens your data privacy.

- Cost. Transmitting continuous streams of data to the cloud incurs high storage and infrastructure costs, and much of the data coming from edge devices is uninteresting. For example, you wouldn’t want your home security camera to save every frame; you’d only need it to save the frames that capture activity. Filtering data on the device so that only what is needed is transmitted and stored reduces these expenses.

- Fast inference (latency). Completing all processing and inference on edge devices can serve users with extremely low latency. For example, devices like Google Home Mini must send some data to the cloud to get a response. On an unstable network, this can result in high latency, which making inferences on the device itself avoids. In addition, low and predictable latency opens the door to new uses of ML that occasional high-latency responses have ruled out until now, such as models whose decisions affect human safety.

- Reliability. With a more distributed architecture, you don’t have to rely on a single centrally hosted model for all predictions. It also reduces exposure to denial-of-service (DoS) attacks against a prediction API, because the models run on the edge devices and there is no central cloud endpoint to target.
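The cost and privacy arguments both come down to deciding on the device which data is worth sending. Below is a minimal sketch in Python, assuming frames arrive as flat lists of 8-bit grayscale pixel values and using simple frame differencing as a hypothetical stand-in for an on-device activity model. The function names and threshold values are illustrative only, not part of any real camera SDK.

```python
from typing import Iterable, List, Optional, Sequence

# Hypothetical tuning values: a pixel "changes" if it differs by more than
# PIXEL_DELTA, and a frame counts as activity if more than CHANGED_FRACTION
# of its pixels changed compared with the previous frame.
PIXEL_DELTA = 25
CHANGED_FRACTION = 0.05


def activity_score(prev: Sequence[int], curr: Sequence[int]) -> float:
    """Return the fraction of pixels that changed noticeably between two frames."""
    if len(prev) != len(curr):
        raise ValueError("frames must have the same size")
    changed = sum(1 for a, b in zip(prev, curr) if abs(a - b) > PIXEL_DELTA)
    return changed / len(curr)


def frames_to_upload(frames: Iterable[Sequence[int]]) -> List[int]:
    """Return the indices of frames worth sending to the cloud.

    All other frames are discarded on the device, which is where the
    bandwidth, storage, and privacy savings come from.
    """
    selected: List[int] = []
    prev: Optional[Sequence[int]] = None
    for i, frame in enumerate(frames):
        if prev is not None and activity_score(prev, frame) > CHANGED_FRACTION:
            selected.append(i)
        prev = frame
    return selected


if __name__ == "__main__":
    still = [100] * 100              # an unchanging 10x10 scene
    moved = [100] * 80 + [200] * 20  # 20% of the pixels change
    stream = [still, still, moved, moved, still]
    print(frames_to_upload(stream))  # -> [2, 4]
```

Frame 4 is flagged as well, because the scene changing back also counts as activity. A real deployment would replace `activity_score` with a small trained model, but the control flow stays the same: score on the device, and upload only what passes.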

These reasons resemble those that led web applications to move from monolithic (traditional) to distributed architectures, supported by content delivery networks (CDNs), edge web workers, and much more code running directly on the client’s device (browser or mobile app).

Applications of TinyML

Here are five potential applications of TinyML:

- Voice commands. Smartphones constantly listen for wake words like “Hey Google” or “Hey Siri.” Not surprisingly, the models that detect wake words do not run on the phone’s main CPU, because keeping it active would consume a lot of power and quickly drain the battery. Instead, with TinyML, these models run on specialized low-power hardware inside the smartphone, which can stay on continuously while consuming very little energy.

- High-quality imagery. Microsatellites can capture high-quality images and transmit them to Earth. However, they have fixed storage that can hold only a limited number of pictures. TinyML can help them capture pictures only when an object of interest is in view.

- Equipment maintenance. In manufacturing, TinyML can help avoid downtime due to equipment failure by alerting technicians when equipment conditions start to deviate from normal, as part of predictive maintenance.

- Monitor retail stock. In retail stores, TinyML can help to monitor the stock of the products on shelves and send alerts when it’s low. This helps businesses manage their inventory and satisfy their customers.

- Increase crop yields. TinyML-based smart sensors in greenhouses can continuously monitor parameters like pH, humidity, and CO2 level, and send alerts when readings deviate from the ideal range. TinyML can also help farmers fight animal illness through wearable devices that monitor the animals’ vital signs, such as heart rate and temperature, and alert farmers when they cross certain thresholds.
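Several of these applications (equipment maintenance, greenhouse sensors, livestock wearables) come down to flagging readings that drift away from normal. A microcontroller cannot keep a long history in memory, so the sketch below, assuming a single numeric sensor stream such as vibration amplitude, uses Welford’s online algorithm to track the running mean and variance in constant memory and flags readings more than a chosen number of standard deviations from the mean. This is a simple statistical stand-in for the trained models a production system would use; the class name, warm-up length, and threshold are hypothetical.

```python
import math


class StreamingAnomalyDetector:
    """Flags sensor readings far from the running mean (z-score test).

    Uses Welford's online algorithm, so memory use stays constant no matter
    how many readings have been seen.
    """

    def __init__(self, z_threshold: float = 3.0, warmup: int = 10) -> None:
        if warmup < 2:
            raise ValueError("warmup must be at least 2 to estimate a variance")
        self.z_threshold = z_threshold  # hypothetical alert sensitivity
        self.warmup = warmup            # readings to observe before alerting
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0                   # sum of squared deviations from the mean

    def _update(self, x: float) -> None:
        """Fold one reading into the running mean and variance (Welford)."""
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def observe(self, x: float) -> bool:
        """Return True if x looks anomalous; normal readings update the stats."""
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))  # sample std deviation
            deviation = abs(x - self.mean)
            if (std == 0 and deviation > 0) or (
                std > 0 and deviation / std > self.z_threshold
            ):
                # Skip the update so the anomaly doesn't shift "normal".
                return True
        self._update(x)
        return False


if __name__ == "__main__":
    detector = StreamingAnomalyDetector(z_threshold=3.0, warmup=5)
    readings = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.02, 5.0, 1.0]
    alerts = [i for i, r in enumerate(readings) if detector.observe(r)]
    print(alerts)  # -> [7]
```

Anomalous readings are deliberately kept out of the running statistics, so a burst of faults does not gradually redefine what “normal” looks like.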

Challenges with TinyML

Though TinyML has already produced many encouraging results, it still faces some significant challenges.

- Limited memory. Traditional platforms like smartphones and laptops have memory measured in gigabytes, but TinyML devices have memory measured in kilobytes or megabytes. This strictly limits the size of the model that can be deployed.

- Hardware variety. TinyML hardware is highly diverse, ranging from general-purpose microcontrollers to novel systems such as neural processors. This makes it hard to deploy a model across so many different architectures.

- Software variety. Models can be deployed on TinyML devices by hand-coding, by code generation, or with ML interpreters. Each approach requires a different amount of time and effort and can result in different performance.

- Model training. Although deploying ML models on TinyML devices has many benefits, most of these models are still trained in the cloud, where they can be iterated on and their accuracy continuously improved. The devices themselves typically only run inference.

- Debugging/troubleshooting. When an ML model performs poorly in the cloud, it is relatively easy to inspect the data it received and determine the cause of the poor performance. However, when a model is distributed across thousands of TinyML devices, often with no data streaming back to the cloud, it is hard to debug and may require new approaches.
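The limited-memory challenge is why models are usually compressed before deployment. One common technique, which this article does not itself describe, so treat it as an illustrative example, is post-training quantization: each 32-bit float weight is stored as an 8-bit integer plus a shared scale and zero point, cutting weight storage roughly fourfold. The sketch below shows the affine int8 mapping in plain Python on a made-up list of weights, with a back-of-the-envelope memory calculation for a hypothetical 250,000-parameter model. Real converters apply this per tensor or per channel and also handle activations.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Affine (asymmetric) quantization of float weights to int8.

    Returns the int8 values plus the (scale, zero_point) needed to map them
    back: real_value ~= scale * (q - zero_point).
    """
    w_min = min(min(weights), 0.0)  # keep 0.0 inside the range so it maps exactly
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(-128 - w_min / scale)  # w_min maps to -128, w_max to 127
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 values back to approximate float weights."""
    return [scale * (v - zero_point) for v in q]


def weight_bytes(num_params: int, bits: int) -> int:
    """Storage needed for num_params weights at the given bit width."""
    return num_params * bits // 8


if __name__ == "__main__":
    weights = [-0.5, -0.1, 0.0, 0.2, 0.75]  # made-up example weights
    q, scale, zp = quantize_int8(weights)
    print(q, dequantize_int8(q, scale, zp))

    print(weight_bytes(250_000, 32))  # -> 1000000 (about 1 MB as float32)
    print(weight_bytes(250_000, 8))   # -> 250000 (about 250 KB as int8)
```

Each restored weight is within half a quantization step (`scale / 2`) of the original, which is the accuracy trade-off that buys the fourfold memory saving.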

TinyML is still in its early stages, but it is expected to grow significantly over the next few years as ML engineers and data scientists contribute to it.