Random forests and decision trees are tools that every machine learning engineer wants in their toolbox.

Think of a carpenter. When a carpenter considers a new tool, they examine a variety of brands. Similarly, we’ll analyze some of the most popular boosting techniques and frameworks so you can choose the best tool for the job.

This article will guide you through decision trees and random forests in machine learning, and compare LightGBM vs. XGBoost vs. CatBoost.


What is a decision tree in machine learning?

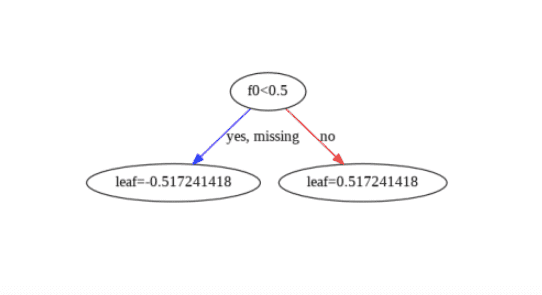

If you are an aspiring data scientist working with machine learning, decision trees can help you produce clearly interpretable results and choose the best feasible option. Let’s start by explaining decision trees. Decision trees are a class of machine learning models that can be thought of as a sequence of “if” statements applied to an input to determine the prediction.
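To make the “sequence of if statements” idea concrete, here is a hand-written decision tree in Python. The feature names (`outlook`, `humidity`, `windy`) and thresholds are hypothetical, loosely modeled on the classic play-tennis toy example; a real tree would learn these cuts from data rather than having them typed in.

```python
def predict_play_tennis(outlook: str, humidity: float, windy: bool) -> str:
    """A hand-written decision tree: each 'if' is one cut, each return is a leaf."""
    if outlook == "sunny":
        # Sunny days: the decision depends on humidity.
        if humidity > 70:
            return "don't play"
        return "play"
    if outlook == "rainy":
        # Rainy days: the decision depends on wind.
        if windy:
            return "don't play"
        return "play"
    # Overcast days always reach the same leaf.
    return "play"


print(predict_play_tennis("sunny", 85, False))
print(predict_play_tennis("overcast", 90, True))
```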

More rigorously, a decision tree is built by incrementally adding vertices. Each internal vertex represents a certain “if” statement (in decision tree lingo, this condition is called the cut), and its child vertices are connected to it by edges representing the possible outcomes of that condition.
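The vertex-and-edge view can be sketched as a small data structure. This assumes binary cuts of the form `x[feature] <= threshold`, which is common but not the only option; the `Node` class and `predict` function are illustrative names, not any library’s API.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    # Internal vertices store a cut: "is x[feature] <= threshold?"
    feature: int = 0
    threshold: float = 0.0
    left: Optional["Node"] = None   # edge followed when the cut is true
    right: Optional["Node"] = None  # edge followed when the cut is false
    # Leaf vertices have no children and hold a prediction instead.
    value: Optional[float] = None


def predict(node: Node, x: Sequence[float]) -> float:
    # Walk from the root along the edges until a leaf is reached.
    while node.value is None:
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.value


tree = Node(feature=0, threshold=2.5,
            left=Node(value=10.0),
            right=Node(feature=1, threshold=0.5,
                       left=Node(value=20.0),
                       right=Node(value=30.0)))
print(predict(tree, [1.0, 0.0]))
print(predict(tree, [3.0, 1.0]))
```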

Eventually, after some sequence of “if” statements, a vertex has no children and holds a prediction value instead; such a vertex is called a leaf. Decision trees can learn both the “if” conditions and the final predictions, but left unconstrained they notoriously overfit the training data. To prevent overfitting, decision trees are often purposefully underfit (for example, by limiting their depth) and then cleverly combined to reach the right balance of bias and variance.
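Learning a tree means choosing its cuts. The sketch below is a simplified illustration rather than any particular library’s algorithm: for a single numeric feature, it tries every midpoint between sorted values and keeps the threshold that minimizes the summed squared error of the two resulting groups, a common criterion for regression trees.

```python
from typing import List, Tuple


def sse(values: List[float]) -> float:
    # Sum of squared errors around the mean: the impurity of a regression leaf.
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_cut(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Return (threshold, total SSE) for the best single cut on one feature."""
    pairs = sorted(zip(xs, ys))
    best = (float("nan"), float("inf"))
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        cost = sse(left) + sse(right)
        if cost < best[1]:
            best = (threshold, cost)
    return best


xs = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
ys = [5.0, 6.0, 5.5, 20.0, 21.0, 19.5]
print(best_cut(xs, ys))
```

Applying `best_cut` recursively to each side until every leaf holds a single training point would reproduce the training labels exactly, which is the overfitting described above; capping the depth is one common way to underfit on purpose.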

What are random forests in machine learning?

Now we’ll explore random forests, the brainchild of Leo Breiman. Random forests are a type of ensemble model: a collection of so-called “weak learner” models whose predictions are combined into a single prediction (for example, by majority vote for classification or by averaging for regression).

In the case of random forests, the collection is made up of many decision trees. Random forests are considered “random” because each tree is trained on a random sample of the training data drawn with replacement (referred to as bagging in more general ensemble models) and considers only random subsets of the input features, typically redrawn at each split (called feature bagging in ensemble model speak). Both sources of randomness produce diverse trees.
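The two kinds of randomness can be sketched with Python’s standard `random` module. Row sampling here is with replacement (a bootstrap sample), and the feature subset size of √p is an assumption based on a common default for classification. For brevity the sketch draws the feature subset once per tree; Breiman’s formulation redraws it at every split.

```python
import random
from typing import List


def bootstrap_indices(n_rows: int, rng: random.Random) -> List[int]:
    # Bagging: draw n_rows row indices *with replacement*, so some rows
    # appear several times and others not at all.
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def feature_subset(n_features: int, k: int, rng: random.Random) -> List[int]:
    # Feature bagging: pick k distinct feature indices without replacement.
    return sorted(rng.sample(range(n_features), k))


rng = random.Random(0)
n_rows, n_features = 8, 6
k = max(1, int(n_features ** 0.5))  # sqrt(p): a common default for classification
for tree_id in range(3):
    rows = bootstrap_indices(n_rows, rng)
    feats = feature_subset(n_features, k, rng)
    print(f"tree {tree_id}: rows={rows} features={feats}")
```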

Bagging reduces the high variance of a weak learner and its tendency to overfit a dataset. Random forests need both types of bagging: without them, many of the trees would choose similar “if” conditions, producing highly correlated trees whose errors do not average out.
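Why correlation matters follows from a standard identity: if each of B trees makes a prediction with variance σ² and every pair of trees has correlation ρ, the variance of their average is ρσ² + (1 − ρ)σ²/B. Adding trees shrinks only the second term, so the ρσ² floor remains unless the trees are decorrelated. The helper below simply evaluates this formula; it assumes identically distributed predictions, which real trees only approximate.

```python
def ensemble_variance(sigma2: float, rho: float, n_trees: int) -> float:
    """Variance of the average of n_trees identically distributed predictions,
    each with variance sigma2 and pairwise correlation rho."""
    return rho * sigma2 + (1 - rho) * sigma2 / n_trees


# Same per-tree variance and forest size, different correlation between trees.
for rho in (0.0, 0.5, 0.9):
    print(rho, ensemble_variance(1.0, rho, 100))
```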

Rather than bagging many independently trained weak learners, an ensemble model may use a so-called boosting technique, which builds a strong learner from a sequence of weak learners, each one trained to correct the errors of those before it.

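In the case of decision trees, the weak learners are deliberately underfit (shallow) trees. Each new tree is fit to the remaining errors of the ensemble so far (in gradient boosting, to the gradient of the loss function), so the combined model gains “if” conditions with every round.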
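Here is a minimal sketch of gradient boosting for squared-error regression on a single feature. For the loss (y − f)²/2, the negative gradient with respect to the prediction f is exactly the residual y − f, so each round fits a one-cut tree (a stump) to the current residuals, and `learning_rate` shrinks each tree’s contribution. The function names and the choice of stumps are illustrative assumptions; XGBoost, LightGBM, and CatBoost are far more elaborate.

```python
from typing import Callable, List


def fit_stump(xs: List[float], residuals: List[float]) -> Callable[[float], float]:
    """Least-squares one-cut tree; assumes at least two distinct x values."""
    best = None
    for t in sorted(set(xs))[:-1]:  # candidate cuts: x <= t vs. x > t
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        cost = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or cost < best[0]:
            best = (cost, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x <= t else rm


def gradient_boost(xs: List[float], ys: List[float], n_rounds: int = 50,
                   learning_rate: float = 0.1) -> Callable[[float], float]:
    base = sum(ys) / len(ys)  # start from a constant prediction
    trees: List[Callable[[float], float]] = []

    def predict(x: float) -> float:
        return base + learning_rate * sum(tree(x) for tree in trees)

    for _ in range(n_rounds):
        # Negative gradient of the squared-error loss = current residuals.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        trees.append(fit_stump(xs, residuals))
    return predict


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
model = gradient_boost(xs, ys)
print(round(model(2.0), 3), round(model(5.0), 3))
```

With a small learning rate each stump only nudges the ensemble, so many rounds are needed; that trade-off between step size and number of trees is one of the main knobs in all three frameworks.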

XGBoost, CatBoost, and LightGBM have emerged as the most popular, heavily optimized frameworks for gradient-boosted tree algorithms. Scikit-learn also provides generic implementations of random forests and gradient-boosted trees, but with fewer optimizations and customization options than XGBoost, CatBoost, or LightGBM, and it is often considered better suited to research than to production environments.

XGBoost, CatBoost, and LightGBM each come with their own framework, and they are distinguished mainly by how they choose the decision tree cuts added at each iteration.


LightGBM vs. XGBoost vs. CatBoost

LightGBM is a boosting technique and framework developed by Microsoft. The framework implements the LightGBM algorithm and is available in Python, R, and C. LightGBM is unique in that it can construct trees using Gradient-based One-Side Sampling, or GOSS for short.

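GOSS ranks the training examples by the magnitude of their loss gradients. It keeps every example with a large gradient, randomly samples from the examples with small gradients (up-weighting the sampled ones to compensate), and evaluates candidate cuts for the next tree on that subset. Because examples with small gradients are already fit well, GOSS allows LightGBM to quickly find the most influential cuts while looking at only a fraction of the data.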
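The sampling step of GOSS can be sketched as follows. The fractions `a` and `b` follow the notation of the LightGBM paper (to our knowledge LightGBM exposes them as the `top_rate` and `other_rate` parameters); the function itself is an illustrative assumption that only selects and weights rows, leaving out the histogram-based split search LightGBM runs on the result.

```python
import random
from typing import List, Tuple


def goss_sample(gradients: List[float], a: float, b: float,
                rng: random.Random) -> List[Tuple[int, float]]:
    """Return (row index, weight) pairs chosen by one-side sampling.

    a: fraction of rows with the largest |gradient|, always kept.
    b: fraction of all rows, sampled at random from the remaining rows.
    """
    n = len(gradients)
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    top_k = int(a * n)
    rand_k = int(b * n)
    kept = [(i, 1.0) for i in order[:top_k]]
    # Sampled small-gradient rows are up-weighted by (1 - a) / b so that their
    # total contribution to the split gain is roughly preserved.
    weight = (1 - a) / b
    sampled = rng.sample(order[top_k:], rand_k)
    return kept + [(i, weight) for i in sampled]


rng = random.Random(42)
grads = [rng.gauss(0, 1) for _ in range(20)]
selection = goss_sample(grads, a=0.2, b=0.1, rng=rng)
print(len(selection), selection)
```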

XGBoost was originally developed by University of Washington researchers and is maintained by open-source contributors. XGBoost is available in Python, R, Java, Ruby, Swift, Julia, C, and C++. Like LightGBM, XGBoost ranks candidate cuts using the gradients of the loss, and it pairs them with the hessian, or second derivative (LightGBM’s split finding uses this second-order information as well). Computing the second derivative comes at a slight cost, but it gives a more accurate estimate of which cut to use.
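The second-order ranking of cuts can be sketched for binary classification with the logistic loss, where each row’s gradient is p − y and its hessian is p(1 − p), with p the predicted probability. The gain formula and the symbols `lam` (L2 penalty on leaf weights, λ in the XGBoost paper) and `gamma` (penalty per added leaf, γ) follow the XGBoost paper; the helper functions are illustrative and not part of XGBoost’s API.

```python
import math
from typing import List, Tuple


def grad_hess_logloss(raw_scores: List[float],
                      labels: List[int]) -> Tuple[List[float], List[float]]:
    """Per-row gradient and hessian of the logistic loss w.r.t. the raw score."""
    grads, hess = [], []
    for f, y in zip(raw_scores, labels):
        p = 1.0 / (1.0 + math.exp(-f))
        grads.append(p - y)         # first derivative
        hess.append(p * (1.0 - p))  # second derivative
    return grads, hess


def split_gain(g_left: List[float], h_left: List[float],
               g_right: List[float], h_right: List[float],
               lam: float = 1.0, gamma: float = 0.0) -> float:
    """Second-order gain of splitting a parent vertex into left and right."""
    def score(g: List[float], h: List[float]) -> float:
        return sum(g) ** 2 / (sum(h) + lam)

    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma


labels = [0, 0, 1, 1]
g, h = grad_hess_logloss([0.0] * 4, labels)
good = split_gain(g[:2], h[:2], g[2:], h[2:])        # separates the classes
bad = split_gain(g[::2], h[::2], g[1::2], h[1::2])   # mixes the classes
print(good, bad)
```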

Finally, CatBoost is developed and maintained by the Russian search engine company Yandex and is available in Python, R, C++, Java, and Rust. CatBoost distinguishes itself from LightGBM and XGBoost by focusing on optimizing decision trees for categorical variables, that is, variables whose values are labels with no inherent numeric relation to one another (e.g., apples and oranges).

To compare apples and oranges in XGBoost, you’d traditionally have to split them into two one-hot encoded variables representing “is apple” and “is orange,” but CatBoost handles categorical columns natively, with no need for this preprocessing. (LightGBM also supports categorical features, but with more limitations than CatBoost.)
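The contrast can be sketched in plain Python. `one_hot` shows the manual preprocessing described above. `ordered_target_stats` is a simplified version of the ordered target statistics described in the CatBoost paper: each row’s category is replaced by a smoothed average of the targets of rows that precede it in a random permutation, so a row never sees its own label. The real library uses several permutations and further refinements, and the smoothing weight `a` and the function names here are assumptions.

```python
import random
from typing import Dict, List, Tuple


def one_hot(values: List[str]) -> Tuple[List[str], List[List[int]]]:
    # One 0/1 column per category, e.g. "is apple" and "is orange".
    categories = sorted(set(values))
    return categories, [[int(v == c) for c in categories] for v in values]


def ordered_target_stats(values: List[str], targets: List[float],
                         prior: float, a: float = 1.0,
                         seed: int = 0) -> List[float]:
    """Encode each row using only targets of rows that come earlier in a
    random order: (sum of earlier same-category targets + a * prior)
                / (count of earlier same-category rows + a)."""
    order = list(range(len(values)))
    random.Random(seed).shuffle(order)
    sums: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    encoded = [0.0] * len(values)
    for i in order:
        c = values[i]
        encoded[i] = (sums.get(c, 0.0) + a * prior) / (counts.get(c, 0) + a)
        # Only now does this row's own target become visible to later rows.
        sums[c] = sums.get(c, 0.0) + targets[i]
        counts[c] = counts.get(c, 0) + 1
    return encoded


fruit = ["apple", "orange", "apple", "orange", "apple"]
sold = [1, 0, 1, 1, 0]
print(one_hot(fruit))
print(ordered_target_stats(fruit, sold, prior=sum(sold) / len(sold)))
```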

LightGBM vs. XGBoost vs. CatBoost: Which is better?

LightGBM, XGBoost, and CatBoost can all train on either CPUs or GPUs for accelerated learning, but comparing them in practice is more nuanced. Each framework has an extensive list of tunable hyperparameters that affect learning and eventual performance.
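As a rough orientation, several common hyperparameters go by different names in the three frameworks’ scikit-learn-style wrappers. The mapping below reflects recent releases as we understand them (for example, XGBoost’s `device` parameter arrived in version 2.0, and older versions used `tree_method="gpu_hist"`), so treat it as a starting point and confirm each name against the documentation for your installed version.

```python
from typing import Dict

# Rough name equivalents across xgboost.XGBClassifier, lightgbm.LGBMClassifier
# and catboost.CatBoostClassifier. Verify against your installed versions.
EQUIVALENT_PARAMS: Dict[str, Dict[str, str]] = {
    "number of trees": {"xgboost": "n_estimators", "lightgbm": "n_estimators", "catboost": "iterations"},
    "learning rate": {"xgboost": "learning_rate", "lightgbm": "learning_rate", "catboost": "learning_rate"},
    "maximum tree depth": {"xgboost": "max_depth", "lightgbm": "max_depth", "catboost": "depth"},
    "L2 regularization": {"xgboost": "reg_lambda", "lightgbm": "reg_lambda", "catboost": "l2_leaf_reg"},
    "train on GPU": {"xgboost": 'device="cuda"', "lightgbm": 'device_type="gpu"', "catboost": 'task_type="GPU"'},
}


def params_for(framework: str) -> Dict[str, str]:
    """Collect one framework's parameter names, keyed by concept."""
    return {concept: names[framework] for concept, names in EQUIVALENT_PARAMS.items()}


for concept, setting in params_for("catboost").items():
    print(f"{concept}: {setting}")
```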

First off, CatBoost is designed for categorical data and is known to perform best on it: its original paper reports state-of-the-art results over XGBoost and LightGBM on eight datasets. As of CatBoost version 0.6, a trained CatBoost model can also make predictions substantially faster than either XGBoost or LightGBM.
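One design choice often credited for CatBoost’s fast prediction is that, by default, it grows symmetric (oblivious) trees, in which every vertex at the same depth uses the same cut. Evaluating such a tree reduces to computing one bit per level and indexing into an array of leaf values, as in the sketch below. The bit ordering and function name are our own illustrative choices, not CatBoost’s internal layout.

```python
from typing import List, Sequence, Tuple


def oblivious_predict(cuts: List[Tuple[int, float]], leaf_values: Sequence[float],
                      x: Sequence[float]) -> float:
    """Evaluate an oblivious tree with len(cuts) levels and 2**len(cuts) leaves.

    Every vertex at depth d shares the cut cuts[d], so the path through the
    tree is just a number whose bit d is 1 when x[feature] > threshold.
    """
    index = 0
    for depth, (feature, threshold) in enumerate(cuts):
        if x[feature] > threshold:
            index |= 1 << depth
    return leaf_values[index]


# Depth-2 tree: two shared cuts and 2**2 = 4 leaves.
cuts = [(0, 2.5), (1, 0.5)]
leaves = [10.0, 20.0, 30.0, 40.0]
print(oblivious_predict(cuts, leaves, [3.0, 0.0]))
```

Because there is no per-vertex branching structure to chase, the same few comparisons can be applied to many rows at once, which suits batched prediction.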

On the flip side, CatBoost’s internal handling of categorical data can slow its training significantly, although it is still reported to train much faster than XGBoost. LightGBM, for its part, reports gains in both accuracy and training speed over XGBoost on five of the benchmarks examined in its original publication.

But to XGBoost’s credit, it has been around the block longer than either LightGBM or CatBoost, so it has better learning resources and a more active developer community. As a distributed gradient boosting library, it uses parallel tree boosting to solve many data science problems quickly and accurately. It also doesn’t hurt that XGBoost is substantially faster and more accurate than its predecessors and other competitors such as Scikit-learn.

Each boosting technique and framework has a time and a place, and it is often not clear which will perform best until you test them all. Fortunately, prior work has benchmarked the three choices fairly extensively, but ultimately it’s up to you, the engineer, to determine the best tool for the job.
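When you do test them all, the key is to score every candidate the same way on held-out data. Below is a framework-agnostic k-fold cross-validation sketch in plain Python. A “trainer” is any function that fits on training rows and returns a predictor, so wrapping an XGBoost, LightGBM, or CatBoost model would mean calling its `fit` and `predict` methods inside such a function. The two toy trainers are hypothetical stand-ins, and in practice scikit-learn’s cross-validation utilities do this job.

```python
from statistics import mean
from typing import Callable, Dict, List, Sequence

Row = Sequence[float]
Predictor = Callable[[Row], int]
Trainer = Callable[[List[Row], List[int]], Predictor]


def k_fold_accuracy(train: Trainer, X: List[Row], y: List[int], k: int = 5) -> float:
    """Mean accuracy over k folds; fold f holds out rows f, f + k, f + 2k, ...
    (shuffle real data before assigning folds)."""
    n = len(X)
    scores = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))
        X_train = [X[i] for i in range(n) if i not in test_idx]
        y_train = [y[i] for i in range(n) if i not in test_idx]
        predict = train(X_train, y_train)
        correct = sum(predict(X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return mean(scores)


def compare(trainers: Dict[str, Trainer], X: List[Row], y: List[int],
            k: int = 5) -> Dict[str, float]:
    # Every candidate is scored on exactly the same folds.
    return {name: k_fold_accuracy(train, X, y, k) for name, train in trainers.items()}


def always_zero(X: List[Row], y: List[int]) -> Predictor:
    return lambda x: 0


def threshold_trainer(X: List[Row], y: List[int]) -> Predictor:
    # Cut halfway between the class means of the first feature.
    zeros = [row[0] for row, label in zip(X, y) if label == 0]
    ones = [row[0] for row, label in zip(X, y) if label == 1]
    cut = (mean(zeros) + mean(ones)) / 2
    return lambda x: int(x[0] > cut)


X = [[float(v)] for v in list(range(10)) + list(range(20, 30))]
y = [0] * 10 + [1] * 10
results = compare({"always_zero": always_zero, "threshold": threshold_trainer}, X, y)
print(results)
```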
